
Fundamentally, computing is all about controlling and managing information over time. Brains can compute, and so can your smartphone. The information that flows through both is, at bottom, the same. Today we’re starting with the most commonly used basic unit of computing: the doped silicon transistor.

And yes, like the human kind of doping, the silicon is doped with a little bit of something special that alters its normal behavior.

- Part I: The Mind of Matter, Delving into Consciousness

- Part II: The Hardware of Us, A Forest of Neurons

- Part III: The Anatomy of Machines, the Chemistry of Transistors

- Part IV: Transistor, Meet Neuron

Fundamentals of Computation: Control and Binaries

There are many ways to build a computer, so why do we use silicon? It all boils down to electrochemistry, the same rules that govern the neurons in our brains. Ultimately, whatever material we use needs to be easy enough for humans to control, and binary enough to send out information in its most practical form: a 1 or a 0.

Not enough control. Quantum computing.

For a long time, the atom was considered the smallest unit of matter and the bit the smallest unit of information. Both ideas were challenged in the last century: at the scale of atoms and below, the world runs on quantum mechanics, which exhibits “quantum weirdness,” behavior in which our everyday intuition about how the world works no longer applies.

Information is no longer strictly binary: it lives in qubits (“quantum bits”), which can be encoded in the quantum states of particles, such as the spin of an electron. A qubit can exist in a superposition, “both 1 and 0 at the same time” or “kind of 1, almost 0.” Stranger still, the act of measuring a qubit changes it: looking forces it to settle on a definite 1 or 0. In other words, it’s weird. And it’s so small and fragile that we can’t really control it reliably. Yet.
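To make “kind of 1, almost 0” a little more concrete, here is a minimal Python sketch of a single qubit as a pair of amplitudes. It assumes the standard textbook picture: a state a|0⟩ + b|1⟩ with |a|² + |b|² = 1, where a measurement reads 0 with probability |a|² and 1 with probability |b|², after which the state collapses to whatever was read. The function names are hypothetical, and this only simulates the math on an ordinary computer; it is not how a real quantum device is programmed.

```python
import math
import random

def make_qubit(p_one: float) -> tuple[complex, complex]:
    """Return amplitudes (a, b) for a qubit that reads 1 with probability p_one."""
    if not 0.0 <= p_one <= 1.0:
        raise ValueError("p_one must be between 0 and 1")
    return complex(math.sqrt(1.0 - p_one)), complex(math.sqrt(p_one))

def measure(qubit: tuple[complex, complex], rng: random.Random) -> tuple[int, tuple[complex, complex]]:
    """Measure once: return the classical bit and the collapsed state."""
    a, b = qubit
    p_one = abs(b) ** 2  # Born rule: probability of reading 1
    bit = 1 if rng.random() < p_one else 0
    # After measurement the superposition is gone: the state is exactly |0> or |1>.
    collapsed = (0j, 1 + 0j) if bit == 1 else (1 + 0j, 0j)
    return bit, collapsed

rng = random.Random(42)
q = make_qubit(0.1)  # "kind of 0, a little bit 1"
bits = [measure(q, rng)[0] for _ in range(10_000)]
print(sum(bits) / len(bits))  # close to 0.1; the exact value depends on the seed

bit, after = measure(q, rng)
print(bit, [measure(after, rng)[0] for _ in range(5)])  # later readings repeat the first one
```

Each fresh qubit gives a 1 only about 10% of the time, but once measured, the collapsed state gives the same answer every time after.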

Too much effort. Magnetic computing.

Magnetism is another way of computing that is very binary, but it takes a huge amount of power to set the initial state. Under the rules of electromagnetism, an electric current generates a magnetic field and a changing magnetic field induces a current, so you have to be really careful about placing the two too close to each other. There are magnetic memory chips made with tantalum [1] that have very low power requirements, but because they are hard to manufacture at scale, they pale in comparison to existing silicon transistor chips.

So now we get to the answer, why silicon? And what is a transistor?

Physics of the Transistor: Silicon Doping

A transistor is the smallest unit of logic in a computer. It’s a switch: either it’s on and lets electrons flow through, signaling a 1, or it’s off, no electrons can pass, and it signals a 0.

You can use many types of materials to make a switch, but the modern digital revolution runs on the marvelous material of doped silicon. With it, we have enough control over the manufacturing process to shrink silicon transistors down to the scale of nanometers while driving their cost down.

You could fit thousands of state-of-the-art transistors onto the cell body of a single neuron, and each one costs only a tiny fraction of a cent [2]. Wowzas!

Silicon by itself, without doping, forms a very stable crystal in which every atom shares its four outer electrons with four neighbors. Because almost all of those electrons are locked into bonds, very few are free to carry a current. Doping the silicon with a small amount of another element adds charge carriers, either spare electrons or missing ones, that can move through the crystal.

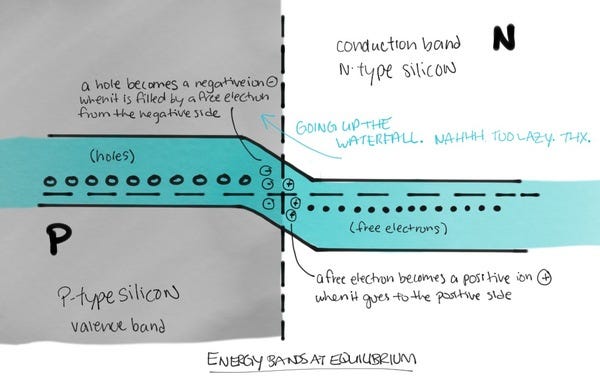

If the dopant atom has one fewer outer electron than silicon (boron, for example), it leaves behind empty spots, or holes. A neighboring electron hops into each hole, leaving a new hole behind it, so the hole itself appears to travel down the wire like a positive charge. This is P-doping (positive). If the dopant has one extra outer electron (phosphorus, for example), that electron is left free-floating and can be passed along the material like a chain of dominoes. This is N-doping (negative).
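The idea that a hole “travels” even though only electrons actually move can be shown with a deliberately simplified one-dimensional toy model. It is an illustration, not real semiconductor physics: each site holds either a bound electron (1) or a hole (0), and at each step the electron just behind the hole hops forward into it. The function name and row layout are hypothetical.

```python
def step(sites: list[int]) -> list[int]:
    """Move one electron: the electron to the left of the hole hops right into it."""
    hole = sites.index(0)
    if hole == 0:
        return sites[:]  # nothing to the left can fill the hole; it has reached the end
    new = sites[:]
    new[hole - 1], new[hole] = 0, 1  # the electron moves right, so the hole moves left
    return new

row = [1, 1, 1, 1, 0]  # one missing electron (a hole) at the right end
for _ in range(5):
    print("".join("e" if s else "o" for s in row))  # e = electron, o = hole
    row = step(row)
```

Electrons shift right one site at a time while the hole drifts left, which is why a hole behaves like a positive charge moving against the flow of electrons.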

When you try to push current from the N-doped side to the P-doped side, it’s like water flowing up a waterfall (see image below). It just doesn’t work unless it gets some extra help to level the playing field. Going the other way, though, it works just fine.
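This one-way behavior is what a single P-N junction (a diode) does, and the standard Shockley diode equation captures it: I = I_s × (e^(V / (n × V_T)) − 1), where V is the voltage on the P side relative to the N side. The sketch below assumes an illustrative saturation current I_s of 1e-12 A, an ideal diode (n = 1), and room temperature (V_T ≈ 25.85 mV); real devices vary.

```python
import math

I_S = 1e-12     # saturation current in amperes (illustrative assumption)
N = 1.0         # ideality factor (assumed ideal diode)
V_T = 0.02585   # thermal voltage in volts at about 300 K

def diode_current(v: float) -> float:
    """Current (A) from the P side to the N side for voltage v (V) across the junction."""
    return I_S * (math.exp(v / (N * V_T)) - 1.0)

for v in (0.6, 0.3, 0.0, -0.3, -0.6):
    print(f"{v:+.1f} V -> {diode_current(v):.3e} A")
```

With the P side positive (forward bias), the current reaches milliamps at 0.6 V. With the N side positive (reverse bias), only a leakage of about a picoamp trickles through, which is the “water up a waterfall” case.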

The classic transistor, the bipolar junction transistor, is a sandwich of these materials in either a PNP or an NPN configuration. The sandwich blocks current in both directions unless a small current is applied to the middle layer, which levels the field of flow and allows a much larger current to pass through.

This sandwiching of doped silicon creates a cheap, nanoscale, easily-controlled binary switch: what has become the fundamental unit of modern digital computing.
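A crude way to see the sandwich acting as a binary switch is to model an NPN transistor with two textbook approximations: the current through the outer layers is the middle-layer (base) current multiplied by a current gain, often called beta, and it can never exceed what the surrounding circuit allows (saturation). The gain of 100, the 5 mA limit, and the 1 mA threshold for reading the output as a 1 are illustrative assumptions, and the functions are hypothetical.

```python
BETA = 100            # current gain (assumed; real transistors vary widely)
I_C_MAX = 5e-3        # largest current the external circuit allows, in amperes (assumed)
ON_THRESHOLD = 1e-3   # collector current we choose to read as logic 1 (assumed)

def collector_current(i_base: float) -> float:
    """Current allowed through the outer layers for a given base (middle-layer) current."""
    if i_base <= 0:
        return 0.0                      # no base current: the junctions block, switch is off
    return min(BETA * i_base, I_C_MAX)  # amplified, but capped at saturation

def as_bit(i_base: float) -> int:
    """Read the transistor as a binary switch."""
    return 1 if collector_current(i_base) >= ON_THRESHOLD else 0

for i_b in (0.0, 1e-6, 20e-6, 100e-6):
    print(f"base {i_b * 1e6:5.0f} uA -> collector {collector_current(i_b) * 1e3:.2f} mA -> bit {as_bit(i_b)}")
```

A base current of 20 µA lets through 2 mA, a hundred times more, and anything beyond that simply saturates the switch fully on.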

Hardware Becomes Logic: The Fundamental Logic Gates of Computing

Information processing is what happens when information changes over time. In the simplest cases, either a single unit of information flips (an inversion) or two units of information combine to form a third. Logic is what determines the outcome.

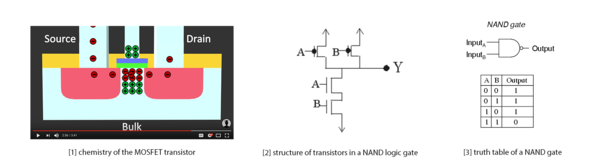

The basic unit of hardware logic is the NAND gate [3], an arrangement of transistors (four of them in a standard CMOS NAND gate). It takes voltages from two inputs and produces a single output voltage, either high voltage as a 1 or low voltage as a 0: the output is low only when both inputs are high, and high otherwise.
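Here is a switch-level sketch of how transistors can be wired into a NAND gate, assuming the standard CMOS arrangement of four transistors. Two p-type transistors, which conduct when their input is 0, connect the output to the high supply in parallel. Two n-type transistors, which conduct when their input is 1, connect it to ground in series. Each transistor is treated as an ideal on/off switch, and the function names are hypothetical.

```python
from itertools import product

def nmos_on(gate: int) -> bool:
    """An n-type transistor conducts when its gate input is high (1)."""
    return gate == 1

def pmos_on(gate: int) -> bool:
    """A p-type transistor conducts when its gate input is low (0)."""
    return gate == 0

def cmos_nand(a: int, b: int) -> int:
    pull_up = pmos_on(a) or pmos_on(b)      # two PMOS in parallel to the high supply
    pull_down = nmos_on(a) and nmos_on(b)   # two NMOS in series to ground
    assert pull_up != pull_down, "exactly one network should conduct"
    return 1 if pull_up else 0              # high voltage = 1, low voltage = 0

for a, b in product((0, 1), repeat=2):
    print(a, b, "->", cmos_nand(a, b))
```

Only when both inputs are 1 does the series path to ground close, pulling the output low; every other combination leaves at least one path to the high supply open.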

Modern-day computation rests on three pieces of logic, AND, OR, and NOT, all of which can be built from NAND gates alone. On top of these basic gates you can build others, such as XOR and XNOR.
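The claim that AND, OR, and NOT, and from them XOR and XNOR, can all be built from NAND alone is easy to check. This minimal Python sketch defines a single `nand` function and composes every other gate from it; the OR uses De Morgan’s law and the XOR uses the well-known four-NAND construction. The function names are hypothetical.

```python
def nand(a: int, b: int) -> int:
    return 0 if (a and b) else 1

def not_(a: int) -> int:
    return nand(a, a)                  # tie both inputs together

def and_(a: int, b: int) -> int:
    return not_(nand(a, b))            # NAND followed by NOT

def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))      # De Morgan: a OR b = NOT(NOT a AND NOT b)

def xor(a: int, b: int) -> int:
    m = nand(a, b)                     # four-NAND construction
    return nand(nand(a, m), nand(b, m))

def xnor(a: int, b: int) -> int:
    return not_(xor(a, b))

print("a b | NOT_a AND OR XOR XNOR")
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "|", not_(a), and_(a, b), or_(a, b), xor(a, b), xnor(a, b))
```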

In fact, you can even design chips whose logic is specialized for machine learning. That is what Google did with the Tensor Processing Unit (TPU), built to run machine-learning models written with its TensorFlow software. The specialization drastically reduced power usage and increased speed, to the point that a single TPU can process over 100 million photos a day for Google Photos [4].

The Big Questions for Technologists

Transistors keep getting smaller, but they don’t really match neurons yet. Neurons are like squishy, growing, constantly evolving transistors. Whereas a transistor connects to only a handful of others, a single neuron can connect to thousands. Transistors also don’t evolve or change (for now): they are permanently etched into a silicon chip.

But as technology evolves, those constraints will evolve as well. Soon, technologies such as quantum computing or neuromorphic chips [5] may push computing past Moore’s Law, which depends on ever-smaller transistors and is nearing their physical limits, producing quantum leaps (pardon the pun) in computational power.
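Moore’s Law is usually stated as the observation that the number of transistors on a chip doubles roughly every two years. The arithmetic below shows how steep that exponential is. It starts from a hypothetical chip of one billion transistors and assumes a clean two-year doubling, which real chips only approximate.

```python
def transistors_after(years: float, start: float = 1e9, doubling_years: float = 2.0) -> float:
    """Projected transistor count after `years`, assuming a fixed doubling period."""
    return start * 2 ** (years / doubling_years)

for years in (0, 2, 10, 20):
    print(f"after {years:2d} years: {transistors_after(years):.2e} transistors")
```

Twenty years of doubling multiplies the count by 2^10 = 1024, which is why each further shrink of the transistor matters so much, and why its physical limits matter too.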

When machines begin to resemble biology, what then?

References

[1] Small tilt in magnets makes them viable memory chips (Berkeley, 2015)

[2] Intel at ISSCC 2015: Reaping the Benefits of 14nm and Going Beyond 10nm

[3] From Physics to Logic (Northwestern)

[4] Google’s Tensor Processing Unit explained: this is what the future of computing looks like (2016)

[5] As Moore’s law nears its physical limits, a new generation of brain-like computers comes of age in a Stanford lab (Stanford, 2017)
